SDS PaaS Cloud Platform

2020-08-14

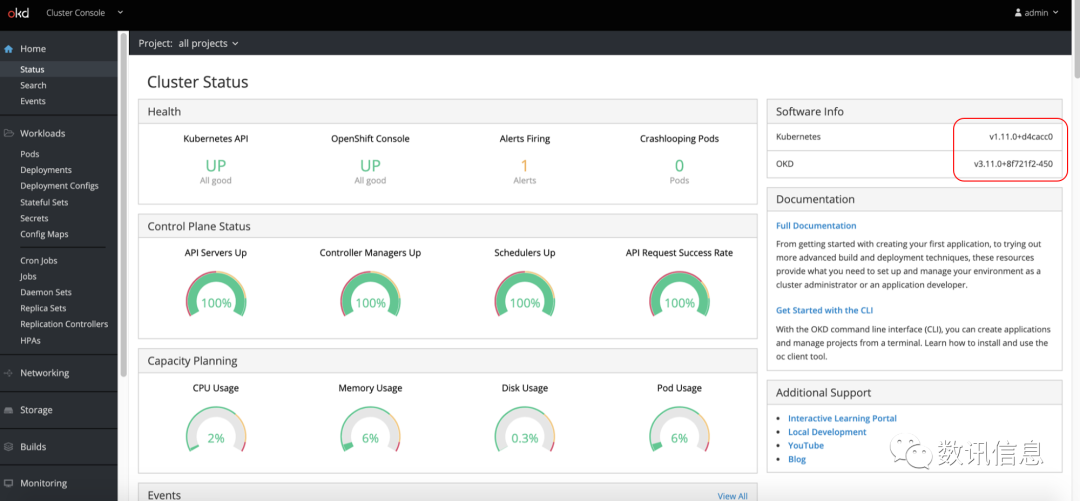

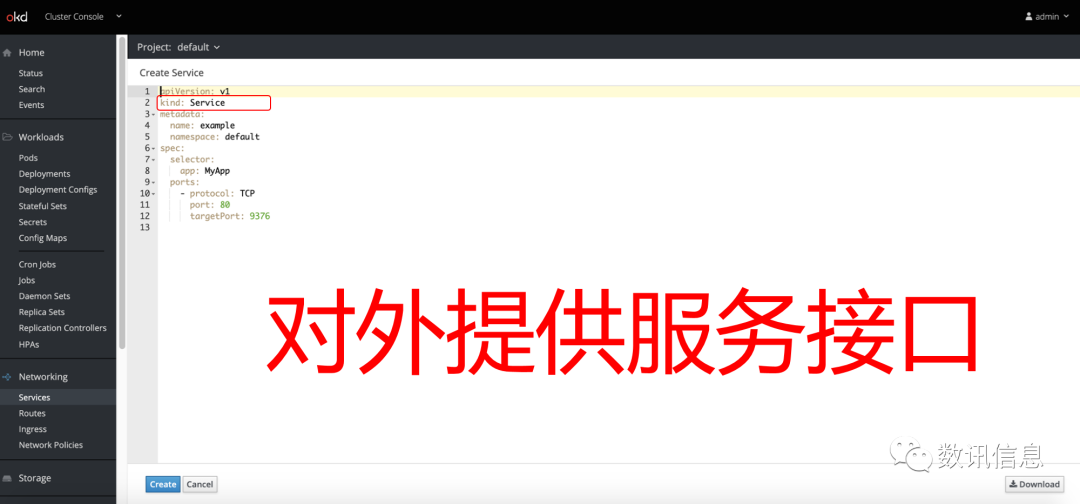

The SDS PaaS Cloud is a Cloud platform re-developed on top of the OKD platform using Docker and K8S technologies. It has a built-in application market that offers middleware, databases, and automated processes, helping users speed up application construction, containerization and deployment across the whole process from development to testing and go-live.

The visualized platform is designed to make this work easier, more convenient and more efficient for users!

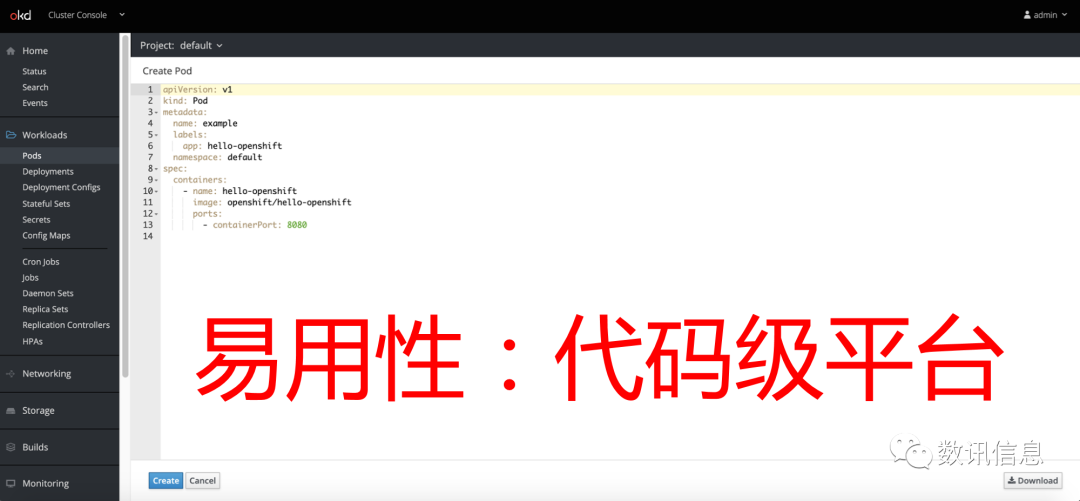

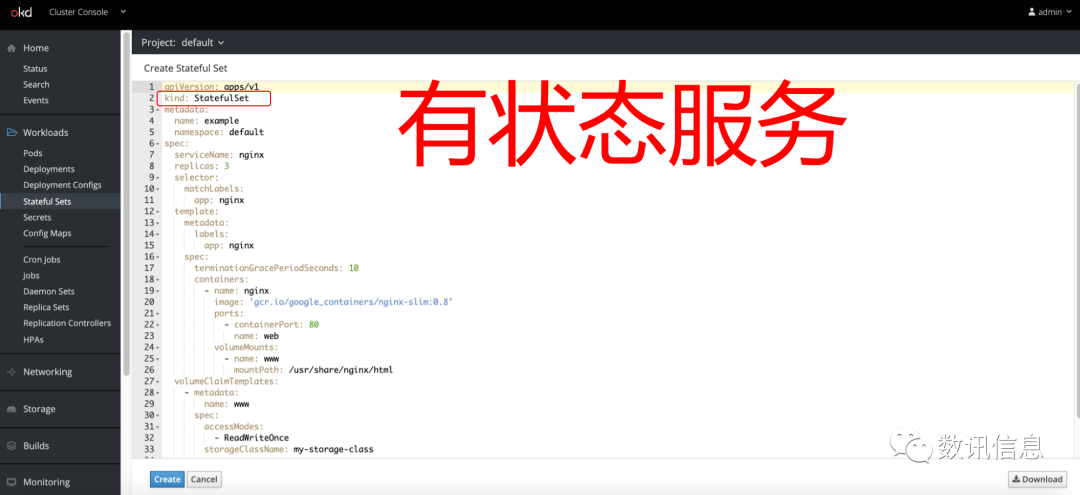

1. Visualized Desktop

❶ Significantly improve efficiency by coordinating development, test and O&M tasks on one platform;

❷ Make it easy and convenient to build Pods, services, and deployments, or to build stateful sets on top of stateful databases.

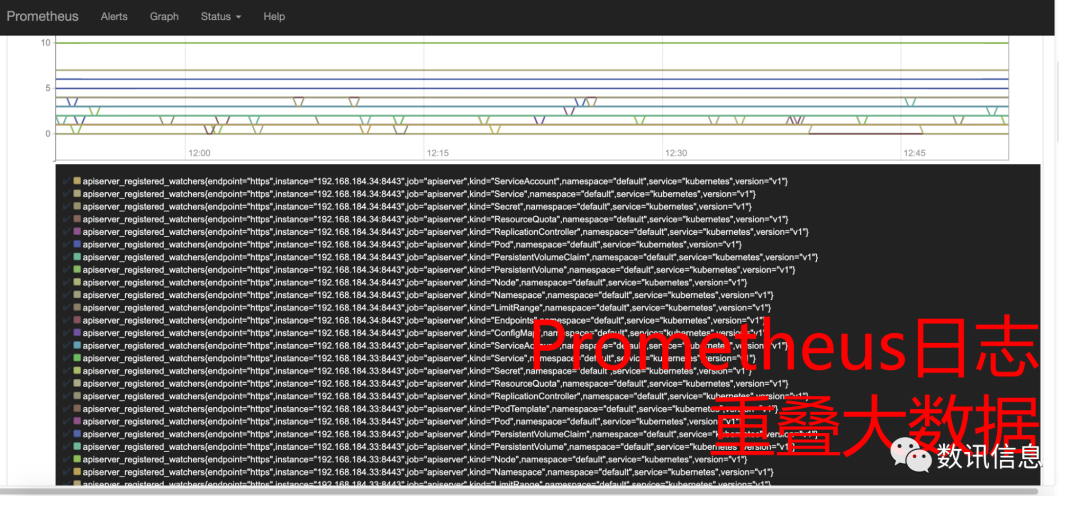

❸ In addition, provide Grafana, logging, and Prometheus in the monitoring suite, giving users O&M and monitoring management capabilities;

❹ Provide a comparatively more complete catalog, giving users a wider range of options.

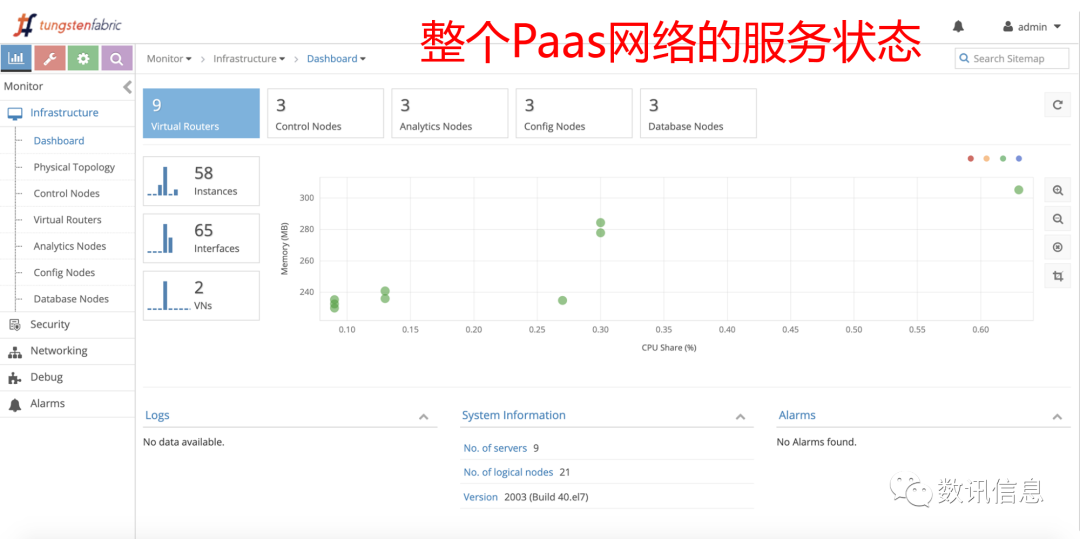

2. SaaS Level Network Support

Unlike traditional PaaS platforms, the SDS PaaS provides stronger infrastructure network support. SDS started studying SDN technologies as early as 2014, e.g. OpenContrail, which was later taken over by the Linux Foundation and renamed Tungsten Fabric; its commercial version is sold under the brand name Juniper Contrail. SDS currently uses Tungsten Fabric version R2003.1.40.

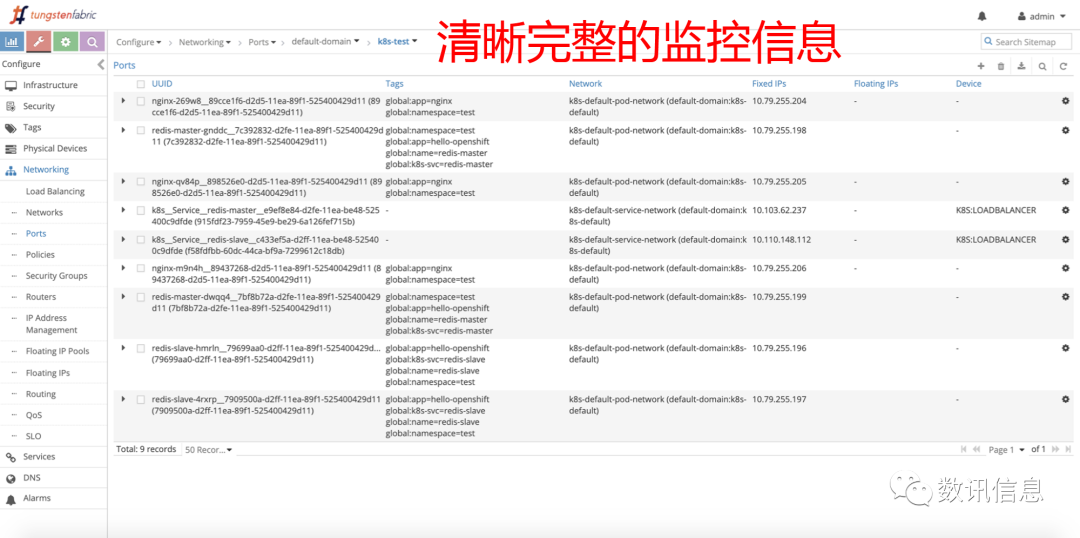

❶ PaaS Network

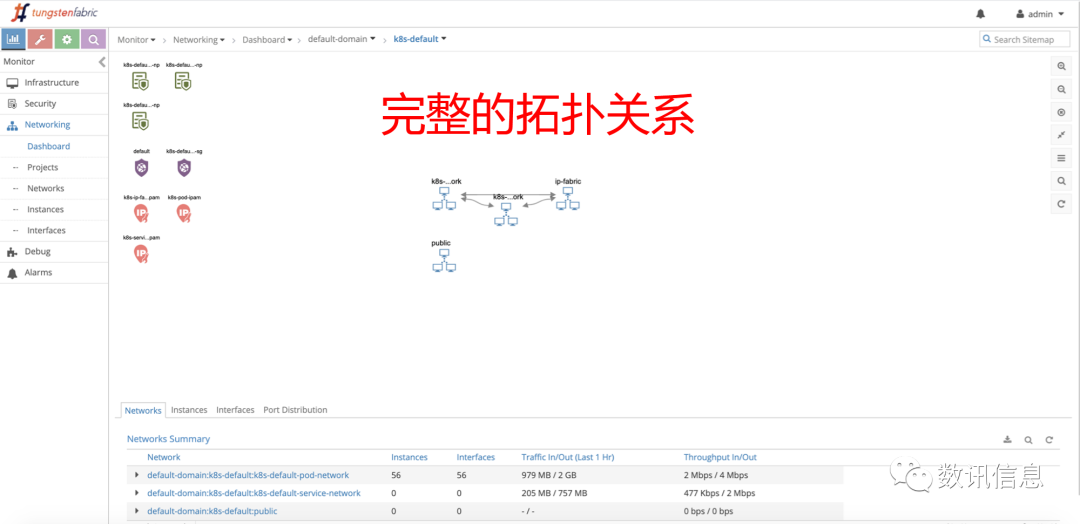

❷ Complete topological relation

❸ Clear monitoring information

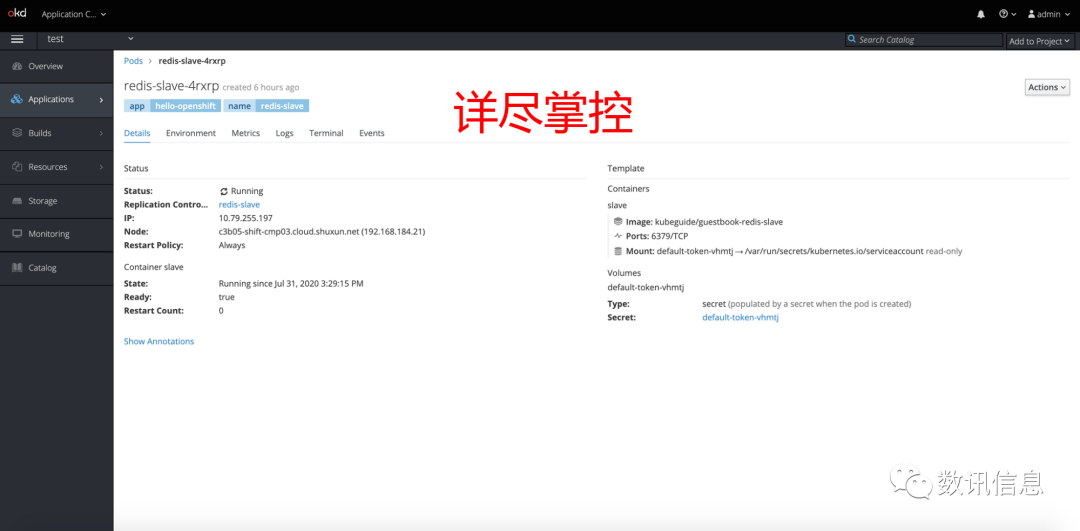

❹ Full details

❺ Some PaaS application scenarios place high demands on network service performance and involve complicated application modes. With SDS's SDN, such troubles can be ironed out with ease.

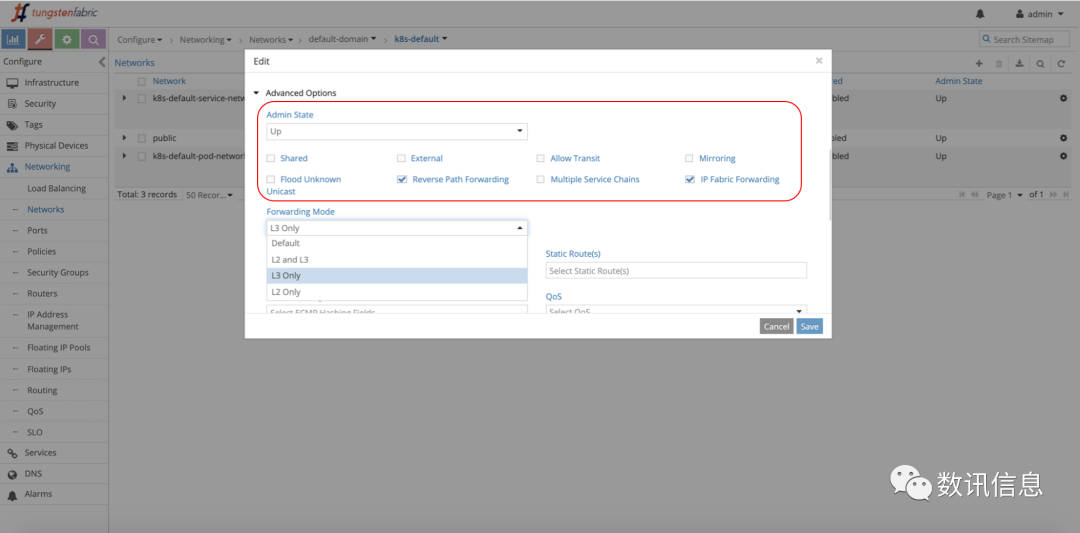

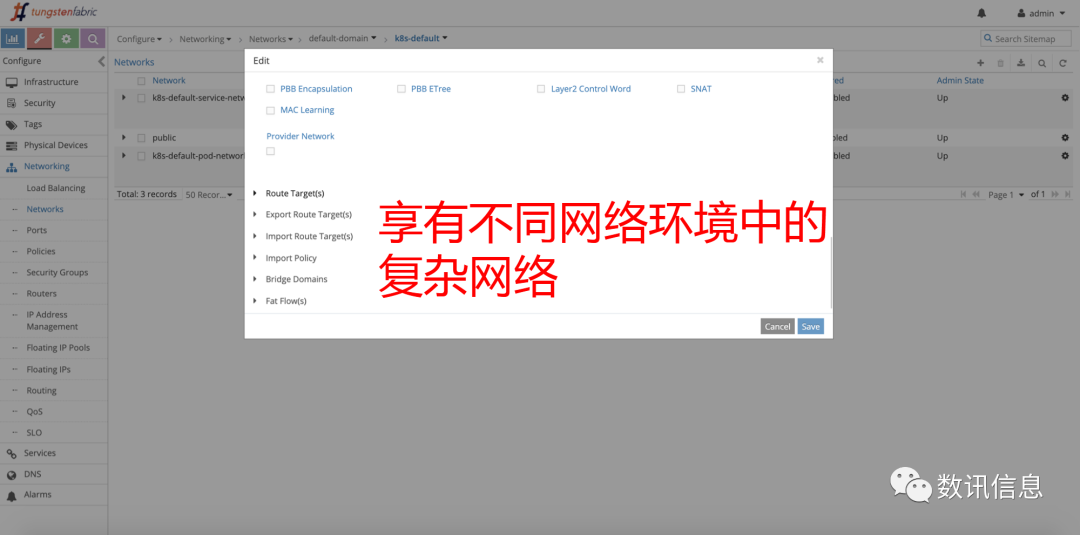

❻ We can assign each network a completely different function set, e.g. sharing, external routing mode, transparent transmission, multi-service chaining, monitoring, reverse path detection, etc. In addition, we can give special support to specific environments, e.g. multicast.

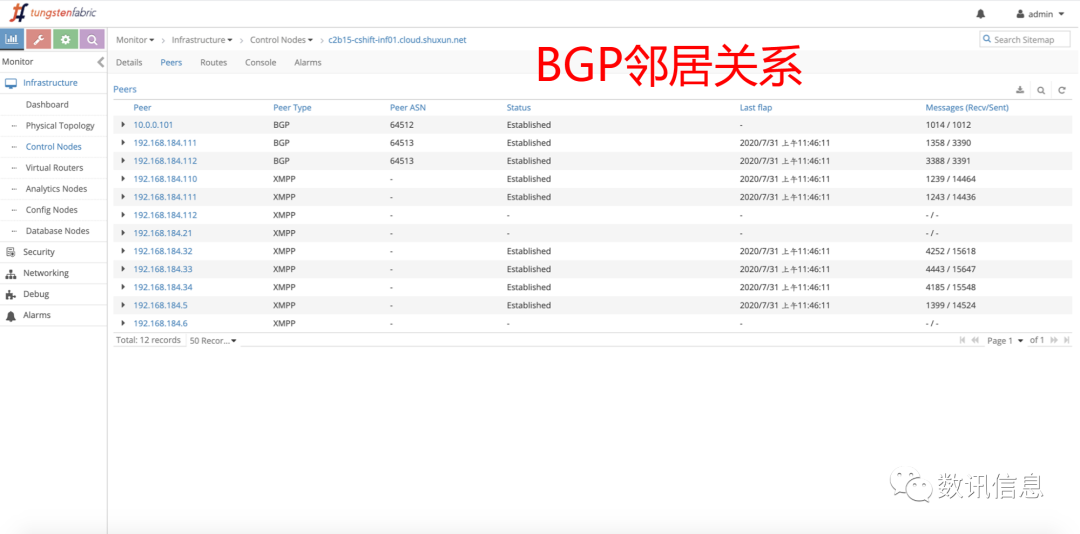

❼ Because we adopted an IP-CLOS architecture (a network made of underlay and overlay structures) for our Cloud computing network, we chose to interconnect the underlay with BGP. The best part of this design is that however much the underlay network changes, users of the overlay network notice almost nothing. This not only guarantees the security and stability of the leased network, but also frees users from worrying about data leaks or route leaks. If a business scenario requires it, however, the user may choose to force a route leak.

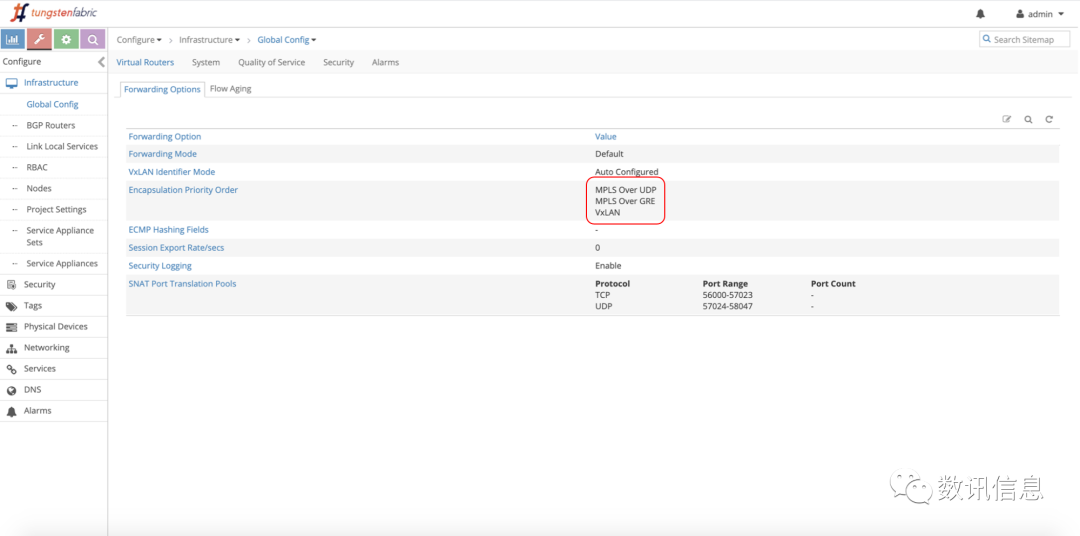

3. L2 & L3 Networks

Our overlay networks provide L2 and L3 forwarding modes and several encapsulation methods (e.g. VXLAN, MPLS over UDP, MPLS over GRE). The design can be seen as follows: east-west traffic at L2 uses VXLAN, while north-south traffic at L3 uses MPLS encapsulated in UDP or GRE. The UDP method has the advantage in ECMP-based multipath load-balancing environments, because the outer UDP source port can vary per flow and gives the underlay something to hash on.
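The ECMP point above can be illustrated with a minimal Python sketch. It assumes a hypothetical underlay switch that hashes the outer source/destination address, protocol and ports; real devices use vendor-specific hash functions, and the path names, addresses and the `entropy_port` helper are illustrative only. The destination port 6635 is the IANA-assigned MPLS-over-UDP port.

```python
import zlib

PATHS = ["spine-1", "spine-2", "spine-3", "spine-4"]  # hypothetical equal-cost paths
MPLS_OVER_UDP_PORT = 6635  # IANA-assigned destination port (RFC 7510)


def ecmp_pick(src_ip, dst_ip, proto, src_port=0, dst_port=0):
    """Pick a path by hashing the outer header fields the underlay can see."""
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    return PATHS[zlib.crc32(key) % len(PATHS)]


def entropy_port(inner_flow):
    """Derive a UDP source port (49152-65535) from the inner flow tuple."""
    return 49152 + zlib.crc32(repr(inner_flow).encode()) % 16384


# 64 inner flows carried between the same pair of tunnel endpoints.
inner_flows = [(f"10.0.0.{i}", "10.0.1.5", "tcp", 40000 + i, 443) for i in range(1, 65)]
tunnel_src, tunnel_dst = "192.168.0.1", "192.168.0.2"

# MPLSoGRE: the outer header has no ports, so every flow hashes identically.
gre_paths = {ecmp_pick(tunnel_src, tunnel_dst, "gre") for _ in inner_flows}

# MPLSoUDP: the source port varies per inner flow, spreading flows over paths.
udp_paths = {
    ecmp_pick(tunnel_src, tunnel_dst, "udp", entropy_port(flow), MPLS_OVER_UDP_PORT)
    for flow in inner_flows
}

print(len(gre_paths), "path(s) used with GRE;", len(udp_paths), "path(s) used with UDP")
```

In this toy model all GRE-encapsulated flows between two compute nodes land on a single path, while the UDP-encapsulated flows are spread across several.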

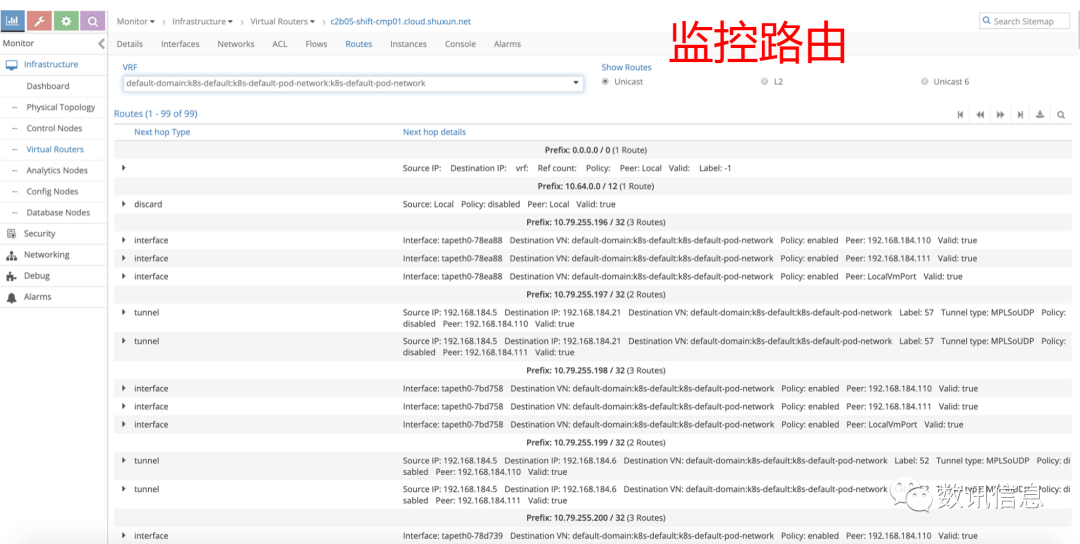

❶ Monitor Routers

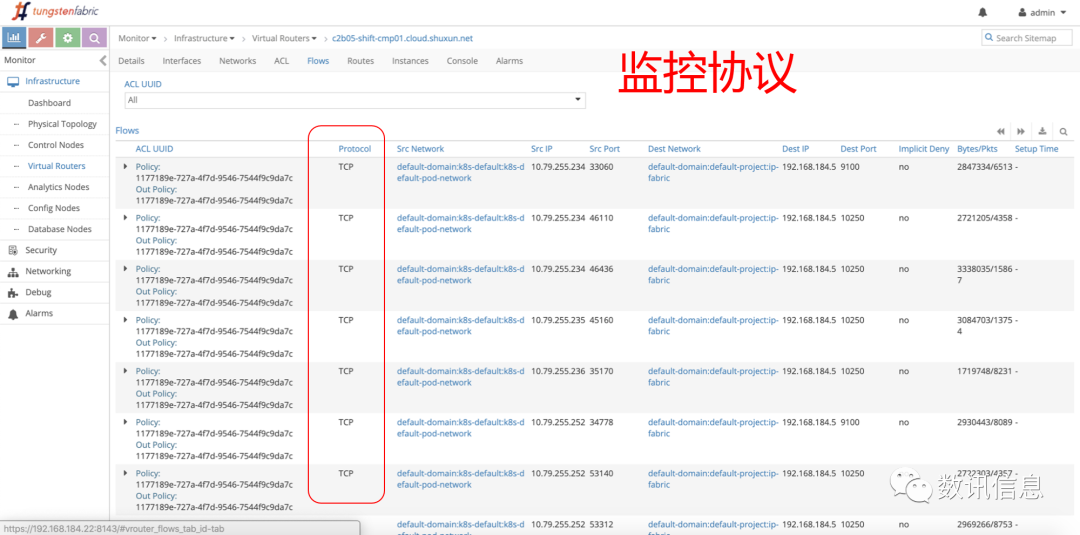

❷ Monitor Protocols

❸ BGP Neighbor Relation

Troubleshooting a Cloud computing network is usually painful. In SDS Cloud environments, however, network problems are easy to locate precisely. For instance, in a PaaS or K8S environment, the management interface shows exact and detailed routing information and flow tables per network or namespace.

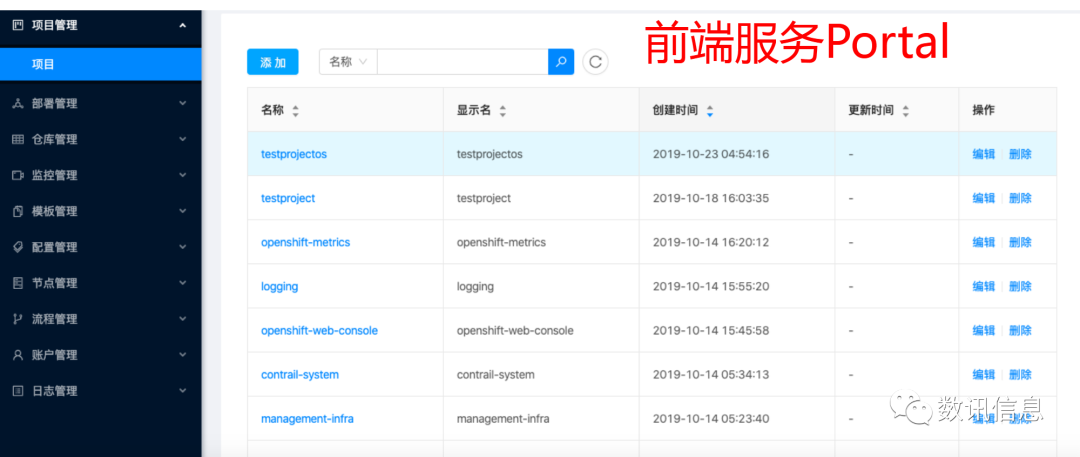

Friendly Portal Interface

To ensure an easy and fast operating experience, SDS built its own SDS-style portal interface (a user guide platform) by layering middleware and re-developing the front end. The portal provides users with PaaS Capacity Services and helps them achieve unified, efficient management and a better user experience.

In addition, it is sometimes necessary to carry out IaaS-PaaS communication at the overlay level. This is a great challenge to network performance. So, how does SDS handle it?

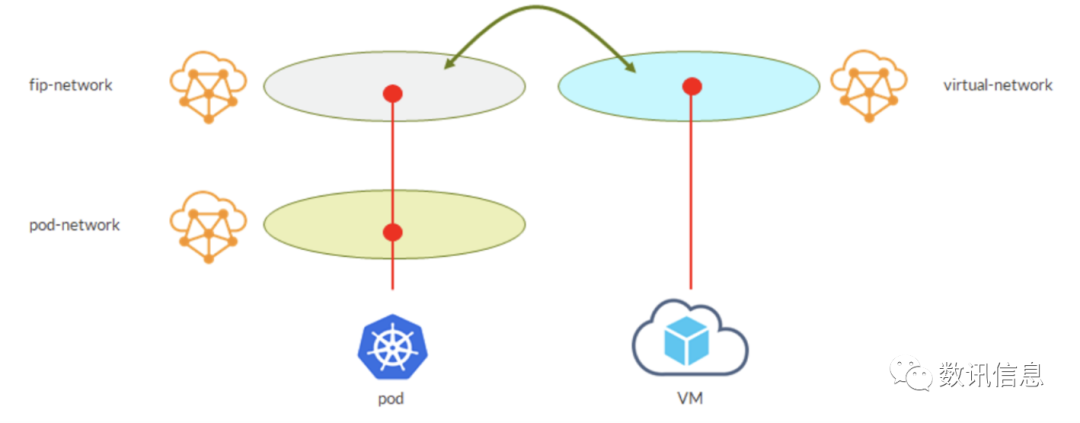

1. Achieve Two-way Access through Policy Routing

By default, a Pod network is itself a VN, so it can be used as a TF VN in an OpenStack environment. We could then create a virtual machine in that network, and it would obviously be able to reach the Pod network. This is doable, but no sensible developer would do it. It is more meaningful to let the VM 'talk' to services. The first use case below relies on a TF network policy. A network policy defines, at L4, which traffic may be exchanged between VNs; for example, a policy may permit any udp/tcp/icmp traffic. Apply this policy to both VN A and VN B, and the two VNs can communicate with each other. Under the hood, the network policy causes route leaking between the two VNs, so VN A learns how to reach the endpoints in VN B without involving other objects such as OpenStack routers.

A Possible Scenario:

1. Our service gets external IPs from an external VN;

2. Create a VM in the virtual network created by the user;

3. Apply network policies to the two VNs (to permit the traffic we want);

4. In the end, the VM is permitted to access our service.
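The scenario above can be modelled with a small Python sketch. This is a toy model under stated assumptions, not the Tungsten Fabric API: the classes `VirtualNetwork` and `PolicyRule`, the helpers `attach`, `allowed` and `leak_routes`, and all names and addresses are hypothetical, and rules are treated as bidirectional.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualNetwork:
    name: str
    prefixes: set                                  # prefixes that live in this VN
    policies: list = field(default_factory=list)   # attached policies
    routes: set = field(default_factory=set)       # routes this VN knows

    def __post_init__(self):
        self.routes |= self.prefixes               # a VN always knows its own prefixes


@dataclass(frozen=True)
class PolicyRule:
    protocol: str   # "tcp", "udp", "icmp" or "any"
    vn_a: str
    vn_b: str       # the rule is treated as bidirectional between vn_a and vn_b


def attach(policy, *vns):
    """Attach the same policy (a list of rules) to every given VN."""
    for vn in vns:
        vn.policies.append(policy)


def allowed(src, dst, protocol):
    """True if a policy attached to BOTH VNs permits this protocol between them."""
    for policy in src.policies:
        if policy not in dst.policies:
            continue
        for rule in policy:
            if {rule.vn_a, rule.vn_b} == {src.name, dst.name} and rule.protocol in ("any", protocol):
                return True
    return False


def leak_routes(vn1, vn2):
    """Exchange routes once some traffic between the two VNs is permitted."""
    if any(allowed(vn1, vn2, proto) for proto in ("tcp", "udp", "icmp")):
        vn1.routes |= vn2.prefixes
        vn2.routes |= vn1.prefixes


# Step 1: the service gets an external IP from the external VN.
external_vn = VirtualNetwork("external-vn", {"203.0.113.10/32"})
# Step 2: the VM lives in the VN created by the user.
user_vn = VirtualNetwork("user-vn", {"192.168.10.5/32"})
before = allowed(user_vn, external_vn, "tcp")

# Step 3: apply one policy to both VNs, permitting only TCP.
policy = [PolicyRule("tcp", "user-vn", "external-vn")]
attach(policy, user_vn, external_vn)
leak_routes(user_vn, external_vn)

# Step 4: the VM can now reach the service.
after = allowed(user_vn, external_vn, "tcp")
print(before, after, sorted(user_vn.routes))
```

The design choice worth noting is that reachability and permission are coupled: routes are exchanged only as a side effect of a policy that both VNs share.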

2. Achieve Two-way Access through L3 VPN Routes

Since the external IPs are assigned from an external network, why not connect the VM directly to the same network?

'Permit a VM to access the services in the same VN' may be a simple choice, but it is never the best one. At least it shows clearly how much flexibility and how many options a user has when a common virtual network layer is built for VNs and VMs.

A virtual network is nothing but a VRF, and everything we know about VRFs from L3 VPN applies. It also means the vRouter can be seen as the well-known PE; this comparison is not far from the truth. As the PE that carries VRFs (VNs), the vRouter can assign route targets to those VRFs (VNs). As an alternative to defining network policies, we can simply assign the same route target to the two VNs from the previous case, which achieves route leaking implicitly. And since the vRouter is a PE, its VRFs can be imported by a good old physical router according to route targets.
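A minimal Python sketch of route-target based leaking between two VRFs follows. It is a simplified model of L3 VPN import/export behaviour, not Tungsten Fabric code; the `Vrf` class, the `exchange_routes` helper, and the route target values and prefixes are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Vrf:
    name: str
    import_rts: set          # route targets this VRF imports
    export_rts: set          # route targets attached to exported routes
    local_prefixes: set      # prefixes originated in this VRF (VN)
    table: set = field(default_factory=set)


def exchange_routes(vrfs):
    """Export every local prefix with its RTs, then import on RT match."""
    adverts = [(prefix, frozenset(v.export_rts)) for v in vrfs for prefix in v.local_prefixes]
    for v in vrfs:
        v.table = set(v.local_prefixes)
        for prefix, rts in adverts:
            if rts & v.import_rts:       # at least one route target in common
                v.table.add(prefix)


pod_vn = Vrf(name="pod-vn", import_rts={"target:64512:100"},
             export_rts={"target:64512:100"}, local_prefixes={"10.47.255.0/24"})
vm_vn = Vrf(name="vm-vn", import_rts={"target:64512:200"},
            export_rts={"target:64512:200"}, local_prefixes={"192.168.10.0/24"})

# With distinct route targets, the two VNs stay isolated.
exchange_routes([pod_vn, vm_vn])
isolated = "10.47.255.0/24" not in vm_vn.table

# Assigning one shared route target to both VNs leaks routes implicitly.
shared_rt = "target:64512:999"
for vrf in (pod_vn, vm_vn):
    vrf.import_rts.add(shared_rt)
    vrf.export_rts.add(shared_rt)
exchange_routes([pod_vn, vm_vn])
leaked = "10.47.255.0/24" in vm_vn.table

print(isolated, leaked, sorted(vm_vn.table))
```

No policy object appears anywhere in this model: the leak is purely a consequence of matching import and export route targets.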

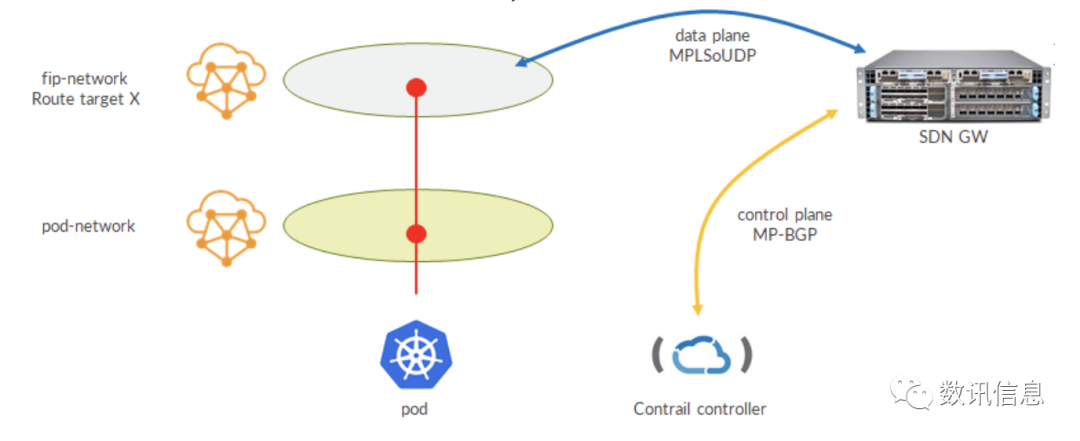

3. Achieve Two-way Access through External Gateway Hardware (PNF)

It is a practicable solution, yet it sometimes does not work as well as expected. For example, assume you want to expose the VM's VN to the outside via the SDN GW. The SDN GW is a PE, too, so it has a VRF configured to match a route target; call it X. With this setting, the SDN GW receives routes from both the VN and the external fip-network, because they all carry the same RT. These routes show up in the SDN GW's VRF once they have been imported into the bgp.l3vpn.0 table from BGP and copied over. Implicitly, we have also exposed the external fip-network, even though we originally wanted only the VM network.

This case is only an illustration of how a use case may or may not fit a given scenario. The good news is that we still have the flexibility to provide suitable solutions for different situations. From the discussion above, it is clear that the key to this use case is to assign a route target to the VN of the external network and prepare a matching VRF on the SDN GW:

A Possible Scenario:

1. The SDN GW exchanges BGP traffic with the TF controller;

2. The TF controller sends fip-network routes that carry route target X;

3. The SDN GW imports the routes into the bgp.l3vpn.0 table and copies them to the VRF according to route targets;

4. The SDN GW creates dynamic MPLSoUDP tunnels to the compute nodes that run the service Pods.
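The four steps above can be sketched in Python as a toy model. This is not Junos or Tungsten Fabric code: the `VpnRoute` and `SdnGateway` classes, the VRF name, the route target strings and the addresses are assumptions made for illustration, and `l3vpn_table` merely stands in for bgp.l3vpn.0.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class VpnRoute:
    prefix: str
    next_hop: str              # compute node that runs a service Pod
    route_targets: frozenset


class SdnGateway:
    def __init__(self, vrf_import_rts):
        self.vrf_import_rts = vrf_import_rts                 # {vrf name: set of RTs}
        self.l3vpn_table = []                                # stands in for bgp.l3vpn.0
        self.vrfs = defaultdict(lambda: defaultdict(set))    # vrf -> prefix -> next hops
        self.tunnels = set()                                 # dynamic tunnels created so far

    def receive(self, route):
        """Steps 3 and 4: store the route, copy it on RT match, create a tunnel."""
        self.l3vpn_table.append(route)
        for vrf_name, import_rts in self.vrf_import_rts.items():
            if import_rts & route.route_targets:
                self.vrfs[vrf_name][route.prefix].add(route.next_hop)
                self.tunnels.add(("MPLSoUDP", route.next_hop))


RT_X = "target:64512:10000"        # hypothetical value for route target X
gw = SdnGateway({"external-vrf": {RT_X}})

# Steps 1 and 2: the controller advertises fip-network routes carrying RT X.
# The same service IP is reachable via two compute nodes (two Pods).
advertised = [
    VpnRoute("203.0.113.10/32", "10.1.1.11", frozenset({RT_X})),
    VpnRoute("203.0.113.10/32", "10.1.1.12", frozenset({RT_X})),
    VpnRoute("10.47.255.3/32", "10.1.1.13", frozenset({"target:64512:1"})),  # no match
]
for route in advertised:
    gw.receive(route)

ecmp_next_hops = gw.vrfs["external-vrf"]["203.0.113.10/32"]
print(sorted(ecmp_next_hops), sorted(gw.tunnels))
```

In this model the service prefix ends up with two next hops in the VRF, one per compute node, and one tunnel is created per next hop.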

Here, multiple MPLSoUDP tunnels arise because the Pods behind a service run on different worker nodes (compute nodes), which is also why there are multiple ECMP paths. Are these all the use cases available?

Of course not, but they help us see many key concepts, e.g. namespace isolation, customized Pod network, customized service network, external network, forced route leak through network policy or route targets, etc. By now it should be clear that these elements can be combined in different ways to create new use cases that support different situations.

In the end, I want to stress that although SDS is still a follower in the Shanghai Cloud computing market, as a long-established IT business operator and datacenter provider we have deeper insight into the network market and its technologies than those who focus only on Clouds. Based on that insight, we have built something really different on top of a range of excellent open-source platforms, in the hope of giving our enterprise users a little extra convenience in coping with complicated environments.

Author: Mr. Qian Yu, Cloud Computing Business GM of SDS

About the SDS Cloud Computing Business Department

The SDS Cloud Computing Business Department was founded on January 2, 2020. Building on the research heritage that its predecessor, the former SDS Cloud Computing Development Department, accumulated over the previous five years, it has brought in advanced resources and leading talent, worked on improving its independently developed Cloud services, and created several Cloud management platforms to 'help enterprises with excellent multi-Cloud management and easy, efficient and effective Cloud Operating & Maintenance'.

SDS Cloud Computing is dedicated to helping enterprises achieve great development in fields such as big data, AI, and enterprise digital transformation with its self-owned IP product, the SDS Cloud!
